Acumatica Summit 2026: Moving ERP From Potential to Impact

Acumatica Ascent 2026 is Acumatica’s first-ever, partner-only learning event. If you’re ready to take your...

For agribusiness leaders, growth in their complex industry has given rise to an important question: should...

Acumatica Summit 2026 brought with it our annual Hackathon, and it delivered jaw-dropping, AI-focused appl...

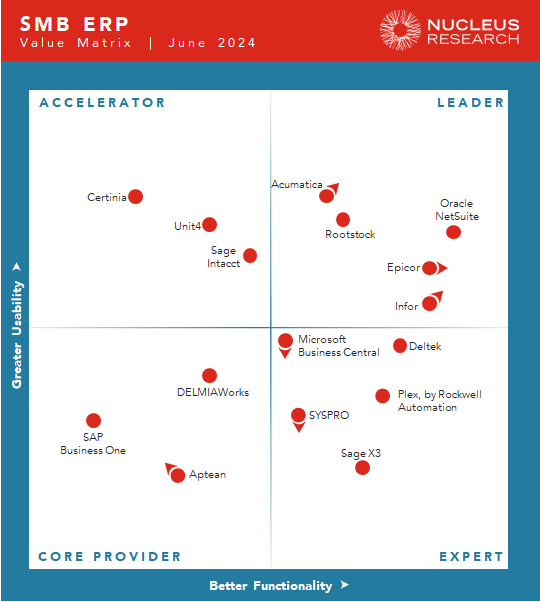

As an SMB, you have enough on your plate without having to worry about finding, affording, and implementin...

With World of Concrete over, Acumatica’s Joel Hoffman provides a look into what took place at the annual i...

Acumatica’s ninth Women in Technology Luncheon and very first WiT Networking Lounge took place at Acumatic...

How do Acumatica integrations seamlessly connect all your business apps, and how does this benefit you? We...

Acumatica Summit 2026 has come and gone, but its impact will be felt for years to come—especially for our ...

Unable to attend Acumatica Summit 2026 but want to learn what Acumatica has been doing and what the award-...

It’s time to reveal the finalists for Acumatica’s annual cloud ERP awards. Here are the partners who made ...

Canada (English)

Colombia

Caribbean and Puerto Rico

Ecuador

India

Indonesia

Ireland

Malaysia

Mexico

Panama

Peru

Philippines

Singapore

South Africa

Sri Lanka

Thailand

United Kingdom

United States
